Terraforming the Azure OpenAI Service Deployment via AzAPI and AzureRM Providers for the Enterprise… and ChatGPT Model

Hey there! Have you heard of Azure OpenAI? It’s a Microsoft Azure service that gives businesses of all sizes and industries access to OpenAI models, helping them automate tasks, gain insights, and make better decisions faster as part of their digital transformation.

One of the coolest things about Azure OpenAI is that it’s designed to be flexible and scalable, which makes it a popular choice for enterprises that need to adapt quickly to changing market conditions. It also includes security features such as encryption and access controls to help protect customer data and systems from cyber threats.

Here are some of the ways security is handled for the service:

- Encryption: Azure OpenAI encrypts data both in transit and at rest, which helps prevent unauthorized access to sensitive data.

- Access Controls: Azure OpenAI includes a range of access controls to help prevent unauthorized access to customer data and systems, including role-based access control, multi-factor authentication, and network security groups.

- Threat Detection: Azure OpenAI includes threat detection capabilities that help organizations detect and respond to potential security threats, including real-time threat monitoring, security alerts, and automated threat response.

- Data Protection: Azure OpenAI includes a range of data protection features, such as backup and disaster recovery, to help ensure that customer data is protected in the event of data loss or a system failure.

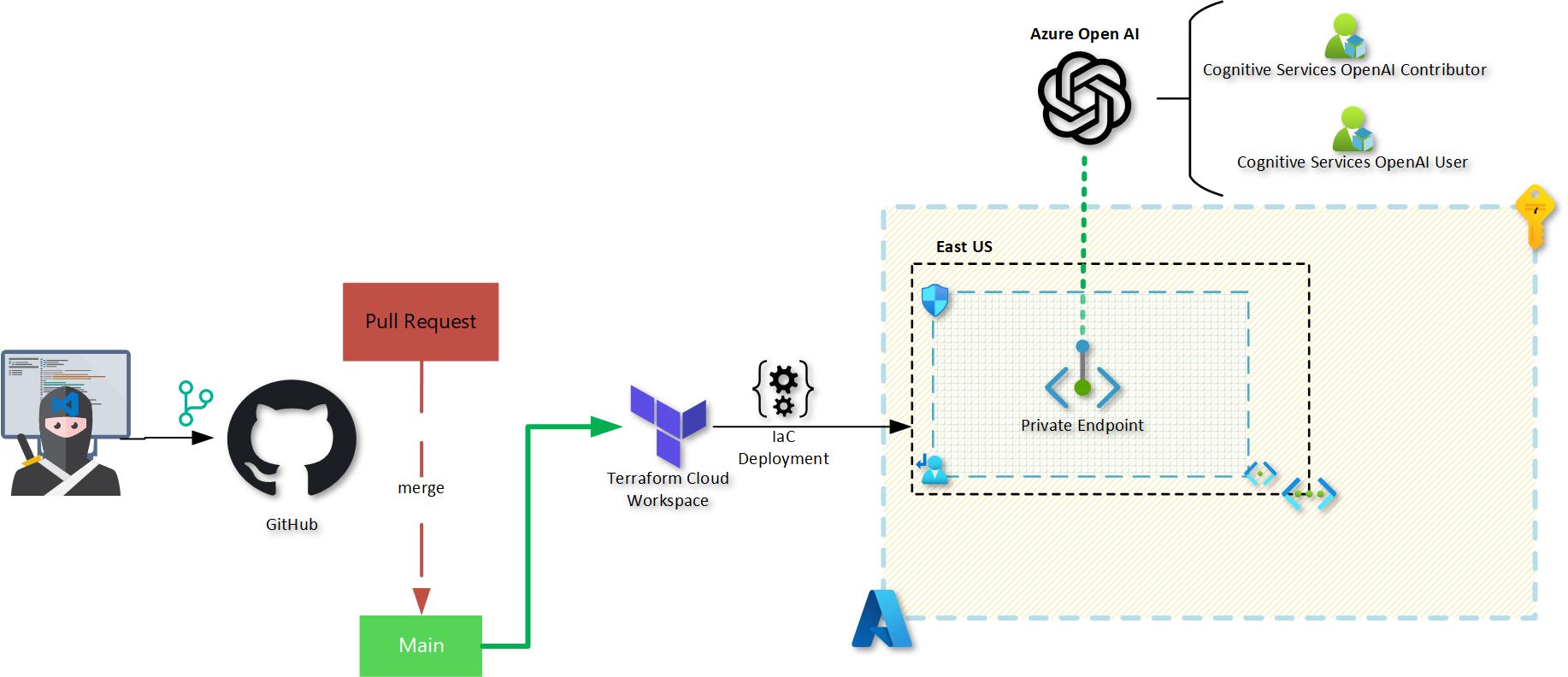

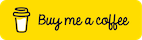

The architecture diagram shows what will be deployed for the Azure OpenAI account. By the way, I chose East US because ChatGPT is currently in preview in that region.

One of the key features is that Azure OpenAI supports Private Endpoints, which let you restrict access to your Azure OpenAI service to only those resources within your virtual network that require it. This keeps the service secure while still allowing authorized resources to reach what they need.

For that reason, I will focus on deploying Azure OpenAI with the Terraform AzureRM and AzAPI providers to enable and manage this feature. When I started exploring, I did not know the AzureRM provider could already deploy the service, so I have included both deployment methods. I did notice a significant difference in deployment time, though: the AzAPI provider was much faster than the AzureRM provider.
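Both deployment methods need their providers declared before any resource can be created. The block below is a minimal sketch of that setup. The version constraints are assumptions, picked to match the syntax used later in this post (a `jsonencode` body for AzAPI and a `scale` block for AzureRM), so adjust them to the versions you have actually tested.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # assumption: 3.x still uses the scale block on deployments
    }
    azapi = {
      source  = "Azure/azapi"
      version = "~> 1.0" # assumption: 1.x expects body as a JSON string
    }
  }
}

# The AzureRM provider requires a features block, even when it is empty.
provider "azurerm" {
  features {}
}

# AzAPI uses the same Azure credentials as AzureRM by default.
provider "azapi" {}
```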

Azure OpenAI Onboarding 🕵️‍♀️

To get started with Azure OpenAI, follow these steps:

- Have your Azure subscription ID ready for onboarding.

- Fill out the Request Access Form. It takes a couple of days to get a response.

- If you are an Enterprise customer and want to ensure your data is not processed by Microsoft for abuse monitoring, you can opt out by filling out this form.

- Have a Terraform Cloud organization ready. I already have mine set up for this deployment. 😎

- Deploy the Azure OpenAI service via Terraform.

- Deploy the model to be used via Terraform.

ℹ️ Data Privacy:

For important information regarding data privacy, please refer to the Azure OpenAI Data Privacy documentation.

Terraform Configuration Using AzAPI Provider 👨‍💻

When I first looked into deploying Azure OpenAI with Terraform, I did not find a dedicated OpenAI resource in the AzureRM provider, so my first attempt used the AzAPI provider. The code I used is below. (I have more AzAPI examples in earlier articles if you’re interested.)

Azure OpenAI Account Example:

# Resource group for Cognitive Services
resource "azurerm_resource_group" "openai" {
  name     = "cerocool-ai-rg"
  location = "eastus"
}

# Azure OpenAI account
resource "azapi_resource" "openai_account" {
  type      = "Microsoft.CognitiveServices/accounts@2022-12-01"
  name      = "cerocoolai-azapi"
  parent_id = azurerm_resource_group.openai.id
  location  = "eastus"

  body = jsonencode({
    sku = {
      name = "S0"
    }
    kind = "OpenAI"
    properties = {
      # Public access is disabled; the account is reached through a private endpoint
      publicNetworkAccess = "Disabled"
      customSubDomainName = "ceroazapi"
    }
  })
}

# Azure OpenAI model deployment - ChatGPT
resource "azapi_resource" "chatgpt_model_azapi" {
  type      = "Microsoft.CognitiveServices/accounts/deployments@2022-12-01"
  name      = "cero_chatgpt_model"
  parent_id = azapi_resource.openai_account.id

  body = jsonencode({
    properties = {
      model = {
        format  = "OpenAI"
        name    = "gpt-35-turbo"
        version = "0301"
      }
      scaleSettings = {
        scaleType = "Standard"
      }
    }
  })
}
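Once the account exists, it is handy to get its endpoint back out of Terraform. The following is a minimal sketch of one way to do that with the AzAPI data source. It assumes AzAPI provider 1.x, where `output` is a JSON string that has to be decoded with `jsondecode`. The data source and output names are hypothetical.

```hcl
# Hypothetical addition: read the account back and export only its endpoint.
data "azapi_resource" "openai_account" {
  type                   = "Microsoft.CognitiveServices/accounts@2022-12-01"
  resource_id            = azapi_resource.openai_account.id
  response_export_values = ["properties.endpoint"]
}

# Assumes AzAPI 1.x, where output is returned as a JSON-encoded string.
output "openai_endpoint_azapi" {
  value = jsondecode(data.azapi_resource.openai_account.output).properties.endpoint
}
```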

Terraform Configuration Using AzureRM Provider 👷

After digging a bit more, I realised that the AzureRM provider’s Cognitive Services resources (azurerm_cognitive_account and azurerm_cognitive_deployment) can deploy the service as well 😎.

# Resource group for Cognitive Services
resource "azurerm_resource_group" "openai" {
  name     = "cerocool-ai-rg"
  location = "eastus"
}

# Azure OpenAI account
resource "azurerm_cognitive_account" "openai" {
  name                  = "cerocoolai-azurerm"
  location              = "eastus"
  resource_group_name   = azurerm_resource_group.openai.name
  kind                  = "OpenAI"
  custom_subdomain_name = "ceroaiazurerm"
  sku_name              = "S0"

  # Public access is disabled; the account is reached through a private endpoint
  public_network_access_enabled = false

  identity {
    type = "SystemAssigned"
  }

  tags = {
    Acceptance = "Test"
  }
}

# Azure OpenAI model deployment - ChatGPT
resource "azurerm_cognitive_deployment" "chatgpt_model_azurerm" {
  name                 = "cero_chatgpt_model"
  cognitive_account_id = azurerm_cognitive_account.openai.id

  model {
    format  = "OpenAI"
    name    = "gpt-35-turbo"
    version = "0301"
  }

  scale {
    type = "Standard"
  }
}
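To check what was deployed without opening the portal, the account’s endpoint and key can be exposed as outputs. This is a small sketch using attributes exported by azurerm_cognitive_account. The output names are hypothetical.

```hcl
# Hypothetical output names; endpoint and primary_access_key are exported
# by the azurerm_cognitive_account resource.
output "openai_endpoint" {
  value = azurerm_cognitive_account.openai.endpoint
}

output "openai_primary_key" {
  value     = azurerm_cognitive_account.openai.primary_access_key
  sensitive = true # keeps the key out of plan and apply logs
}
```

Because public network access is disabled, the endpoint only answers from inside the virtual network, but `terraform output openai_endpoint` still confirms the custom subdomain that was created.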

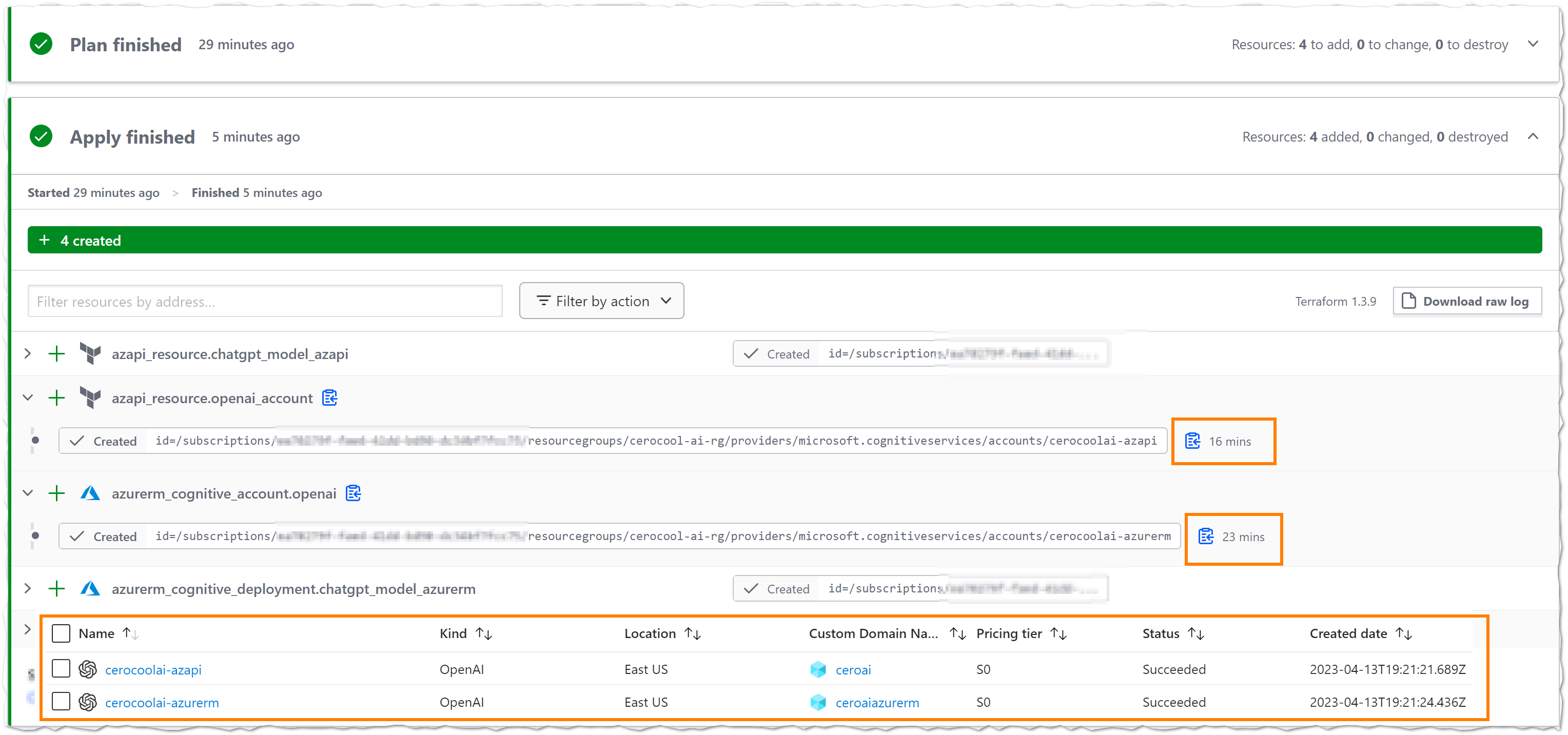

The created resources are shown in the screenshot. What really caught my attention, however, was the deployment time with the AzAPI provider versus the AzureRM provider. In this instance, the service took 16 minutes to deploy via AzAPI and 23 minutes via AzureRM 🤔. That is definitely something I want to understand better, as a 7-minute difference seems like quite a lot.

Private Endpoint for Azure OpenAI Resource 📝

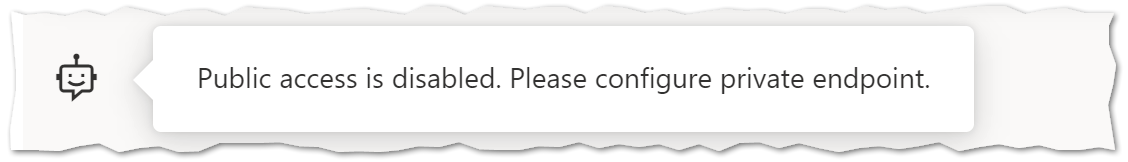

To create the private endpoint for the resource, you can use the snippet below. You will of course need the privatelink.openai.azure.com private DNS zone created for OpenAI. Azure OpenAI Studio will actually let you know as soon as you remove public access.

# Private endpoint for the Azure OpenAI account
resource "azurerm_private_endpoint" "openai" {
  name                = "openai-pe"
  location            = "westeurope" # must match the region of the virtual network that holds the subnet
  resource_group_name = azurerm_resource_group.openai.name
  subnet_id           = data.azurerm_subnet.privatelink.id

  private_service_connection {
    name                           = "privateendpoint-1"
    private_connection_resource_id = azurerm_cognitive_account.openai.id
    is_manual_connection           = false
    subresource_names              = ["account"]
  }
}

# A record that resolves the account's custom subdomain to the private endpoint IP
resource "azurerm_private_dns_a_record" "openai" {
  # The record name must match the custom subdomain, since that is what the endpoint FQDN uses
  name                = azurerm_cognitive_account.openai.custom_subdomain_name
  zone_name           = "privatelink.openai.azure.com"
  resource_group_name = azurerm_resource_group.openai.name
  ttl                 = 300
  records             = [azurerm_private_endpoint.openai.private_service_connection[0].private_ip_address]
}
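The private endpoint snippet refers to `data.azurerm_subnet.privatelink` and to a private DNS zone that are not defined in this post. The block below is a rough sketch of what those prerequisites could look like. The subnet, virtual network, and network resource group names are hypothetical placeholders, and it assumes the DNS zone lives in the same resource group as the OpenAI account.

```hcl
# Hypothetical names: replace with your own network resources.
data "azurerm_subnet" "privatelink" {
  name                 = "privatelink-subnet"
  virtual_network_name = "hub-vnet"
  resource_group_name  = "network-rg"
}

data "azurerm_virtual_network" "hub" {
  name                = "hub-vnet"
  resource_group_name = "network-rg"
}

# Private DNS zone used by Azure OpenAI private endpoints
resource "azurerm_private_dns_zone" "openai" {
  name                = "privatelink.openai.azure.com"
  resource_group_name = azurerm_resource_group.openai.name
}

# Link the zone to the virtual network so clients inside it can resolve the record
resource "azurerm_private_dns_zone_virtual_network_link" "openai" {
  name                  = "openai-dns-link"
  resource_group_name   = azurerm_resource_group.openai.name
  private_dns_zone_name = azurerm_private_dns_zone.openai.name
  virtual_network_id    = data.azurerm_virtual_network.hub.id
}
```

If the zone is managed in the same configuration like this, the A record can reference `azurerm_private_dns_zone.openai.name` instead of a hard-coded zone name, which also makes Terraform create the zone before the record.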

Summary 📻

In this blog post, we explored the Azure OpenAI deployment process using Terraform and highlighted some of the capabilities that enterprises can leverage, such as Private Endpoints for enhanced security. We also noted, with some surprise, that deployment was slower with AzureRM than with AzAPI (23 minutes versus 16 minutes in this instance), though your experience may vary.

What’s next?

Last but not least, if you want to join the Azure OpenAI GPT-4 Public Preview Waitlist, please check here.

I do hope this helps someone and that you find it informative, so please let me know your constructive feedback, as it’s always important 🕵️‍♂️. That’s it for now. Hasta la vista 🐱‍🏍!

🚴‍♂️ If you enjoyed this blog, you can empower me with some caffeine to continue working on new content 🚴‍♂️.
