Deploying Private AKS using Bicep, because ARM is not strong enough :🦾!!!

Project Bicep is taking ARM to the next level and it is making lots of good noise in the IaC world. I have been using Terraform for a while, so I decided to check it out, see how it compares to HCL for deploying IaC on the Azure platform, and see how easy it is to author these kinds of templates.

Given that Bicep now supports the creation of modules, it is even more appealing. It is still early stages, but I am looking forward to feature parity with Terraform.

Environment preparation for Bicep

What do I need to get started?

- I am using WSL2 on Windows 10 for my environment, so I updated my Azure CLI to the latest version, as v2.20 or later is required. Then I installed the Bicep compiler by running the command az bicep install.

- I installed the Bicep extension for VS Code, which definitely makes life so much easier: the IntelliSense is great and it makes authoring quite enjoyable.

- I relied on the Azure ARM template documentation to check which values to use in the Bicep template for each resource type, much as I would use the AzureRM provider documentation in Terraform. I had a few challenges here, as Terraform may use different argument names from the ones the Azure APIs use.

AKS the terraform way

My Terraform deployment uses modules stored in my private Terraform Cloud module repository. The structure of the deployment files is shown below, and every module follows the same layout. For instance, my AKS deployment calls all the approved modules it needs and deploys the whole solution.

    AKS Deployment
    |_ main.tf
    |  |_ AKS Module
    |  |  |_ main.tf
    |  |  |_ variables.tf
    |  |  |_ outputs.tf
    |  |
    |  |_ VNet Module
    |  |  |_ main.tf
    |  |  |_ variables.tf
    |  |  |_ outputs.tf
    |  |
    |  |_ Subnet Module
    |  |  |_ main.tf
    |  |  |_ variables.tf
    |  |  |_ outputs.tf
    |  |
    |  |_ Route Table Module
    |     |_ main.tf
    |     |_ variables.tf
    |     |_ outputs.tf
    |
    |_ variables.tf
    |_ outputs.tf
    |_ providers.tf

The main.tf file of the AKS module looks like the one below, with all the values provided by populating the variables. 👇

    resource "azurerm_kubernetes_cluster" "test" {
      name                    = var.name
      resource_group_name     = var.resource_group.name
      location                = var.resource_group.location
      dns_prefix              = var.dns_prefix
      kubernetes_version      = var.kubernetes_version
      private_cluster_enabled = true

      default_node_pool {
        name                 = "default"
        orchestrator_version = var.kubernetes_version
        node_count           = var.node_count
        vm_size              = var.node_size
        os_disk_size_gb      = var.os_disk_size_gb
        vnet_subnet_id       = var.node_vnet_subnet_id
        max_pods             = var.max_pods
        enable_auto_scaling  = true
        min_count            = var.auto_scaling_min_count
        max_count            = var.auto_scaling_max_count
        availability_zones   = var.availability_zones
      }

      addon_profile {
        azure_policy {
          enabled = false
        }
      }

      role_based_access_control {
        enabled = true

        azure_active_directory {
          managed                = true
          admin_group_object_ids = var.aad_group_id
        }
      }

      identity {
        type = "SystemAssigned"
      }

      network_profile {
        network_plugin     = "azure"
        network_policy     = var.network_policy
        load_balancer_sku  = "standard"
        outbound_type      = "userDefinedRouting"
        service_cidr       = "192.168.254.0/24"
        dns_service_ip     = "192.168.254.10"
        docker_bridge_cidr = "172.22.0.1/16"
      }
    }

With open-source Terraform, the deployment uses providers.tf to authenticate to the Azure platform before deploying the resources. With TFC/TFE, you will already have a service principal assigned to the workspace for the deployment. Please refer to the Terraform configuration documentation.

AKS the Bicep way 🦾

Azure Bicep did not disappoint: using the VS Code extension's IntelliSense, I was able to write the module in a few minutes, in a similar fashion to my Terraform module. It is still not as robust as Terraform, but I guess it is early stages for Bicep. The file structure is quite similar, except that at this stage I cannot keep my variables/parameters and outputs in separate files. In addition, I do not need the provider file: this is ARM after all, so whatever context is in use (user/SPN/identity) is used for the deployment.

    AKS Deployment
    |_ main.bicep
    |_ AKS Module
    |  |_ aks.bicep
    |
    |_ Network Module
    |  |_ vnet.bicep
    |
    |_ Route Table Module
       |_ routetable.bicep

The aks.bicep file of the AKS module looks like the one below, with all the values provided by populating the parameters. 👇

    param location string = resourceGroup().location
    param AKSName string
    param subnet_id string
    param group_id string
    param dns_prefix string
    param kubernetes_version string
    param node_count int
    param auto_scaling_min_count int
    param auto_scaling_max_count int
    param max_pods int
    param os_disk_size_gb int
    param node_size string

    resource aks 'Microsoft.ContainerService/managedClusters@2021-02-01' = {
      name: AKSName
      location: location
      properties: {
        kubernetesVersion: kubernetes_version
        enableRBAC: true
        dnsPrefix: dns_prefix
        aadProfile: {
          managed: true
          adminGroupObjectIDs: [
            group_id
          ]
        }
        networkProfile: {
          networkPlugin: 'azure'
          networkPolicy: 'azure'
          serviceCidr: '192.168.254.0/24'
          dnsServiceIP: '192.168.254.10'
          dockerBridgeCidr: '172.22.0.1/16'
          outboundType: 'userDefinedRouting'
        }
        apiServerAccessProfile: {
          enablePrivateCluster: true
        }
        addonProfiles: {
          azurepolicy: {
            enabled: false
          }
          httpApplicationRouting: {
            enabled: false
          }
        }
        agentPoolProfiles: [
          {
            name: 'agentpool'
            mode: 'System'
            osDiskSizeGB: os_disk_size_gb
            count: node_count
            vmSize: node_size
            osType: 'Linux'
            type: 'VirtualMachineScaleSets'
            maxPods: max_pods
            vnetSubnetID: subnet_id
            enableAutoScaling: true
            minCount: auto_scaling_min_count
            maxCount: auto_scaling_max_count
            orchestratorVersion: kubernetes_version
            availabilityZones: [
              '1'
              '2'
              '3'
            ]
          }
        ]
      }
      identity: {
        type: 'SystemAssigned'
      }
    }

    output object object = aks
    output aks_id string = aks.properties.privateFQDN
    output aks_identity object = aks.properties.identityProfile

Definitely much nicer and easier to digest than an ARM template in JSON format, and quite similar to the Terraform language layout. At the end of the day, they make the same API calls 💪.
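One thing the parameters in aks.bicep do not do is validate their inputs. As a minimal sketch, and not part of my original module, Bicep parameter decorators could replace a few of the plain declarations. The length limits and the list of allowed VM sizes below are illustrative assumptions, not values taken from my deployment.

```bicep
// Sketch only: the limits and allowed sizes are illustrative assumptions.
@description('Name of the AKS cluster.')
@minLength(1)
@maxLength(63)
param AKSName string

@description('Initial number of nodes in the system pool.')
@minValue(1)
param node_count int

@description('Lower bound for the cluster autoscaler.')
@minValue(1)
param auto_scaling_min_count int

@description('VM size for the nodes; extend the list as needed.')
@allowed([
  'Standard_D2_v3'
  'Standard_D4_v3'
])
param node_size string
```

With decorators like these, a bad value is rejected when the deployment is validated instead of failing halfway through the cluster creation.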

Module Structure using Bicep

Modules are called using the following syntax, which is quite similar to Terraform module blocks and supports dependsOn, something that was introduced for modules in the Terraform world in v0.13. However, versioning is not supported at this time, and <module_path> can only be a local path, which does not provide enough flexibility and governance around module creation for reusability.

    module <name> '<module_path>' = {
      name: <required name of the nested deployment>
      scope: <rg/subscription>
      // Input parameters
      params: {
        <parameter-name>: <parameter-value>
      }
      dependsOn: [
        <module/resource>
      ]
    }
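As a hedged illustration of that syntax, the sketch below wires two modules together. The file names, the parameter names and the routeTableId output are hypothetical and only there to show the shape. Because the second module reads an output of the first, Bicep infers the ordering on its own, so an explicit dependsOn is only needed when no such reference exists.

```bicep
// Hypothetical sketch: module paths, parameters and outputs are assumptions.
targetScope = 'resourceGroup'

param location string = resourceGroup().location

module routeTable './routetable.bicep' = {
  name: 'routetable-deployment'
  params: {
    location: location
    routeTableName: 'aks-rt'
  }
}

module network './network.bicep' = {
  name: 'network-deployment'
  params: {
    location: location
    // Reading an output of routeTable creates an implicit dependency,
    // so this module is deployed after routeTable without dependsOn.
    routeTableId: routeTable.outputs.routeTableId
  }
}
```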

Solution Deployment Bicep Way

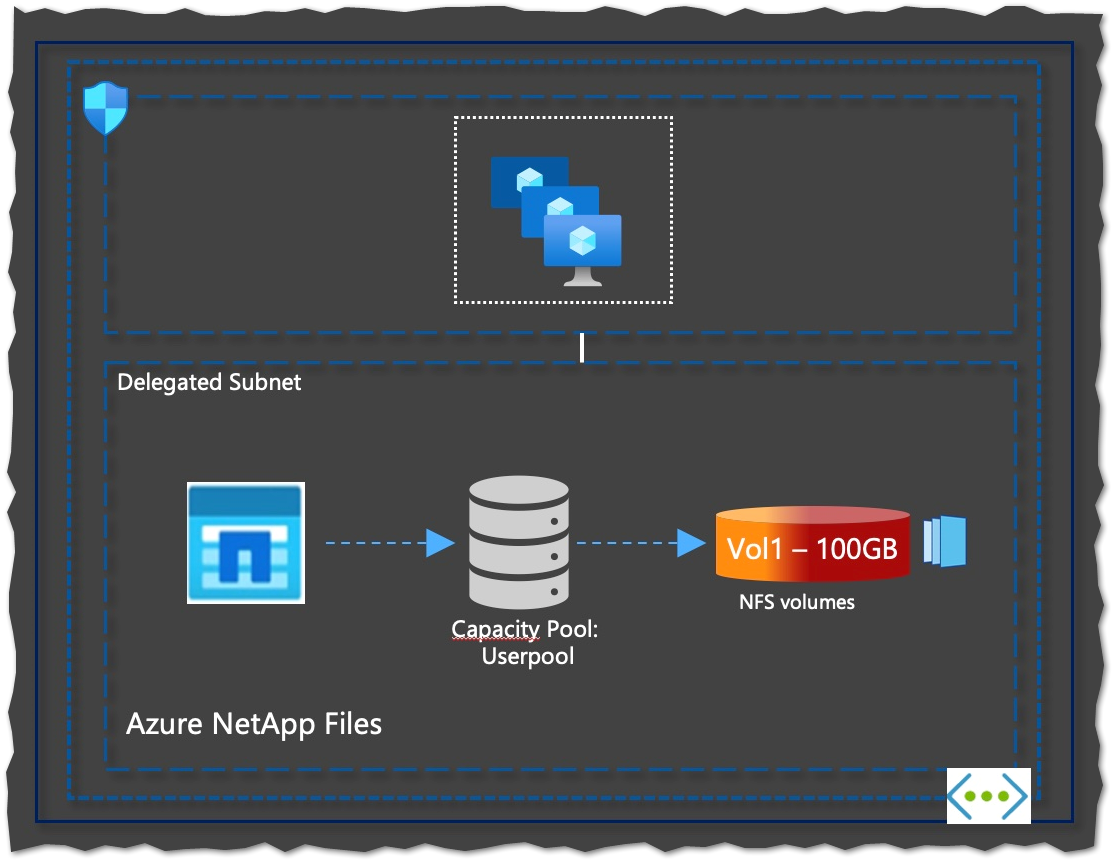

To deploy the whole solution, I set up a main.bicep that calls the modules, passing the variables and parameters each of them requires. The file looks like the one below. Because I am also deploying the resource group that logically groups my resources, the deployment needs to be scoped to my subscription.

    targetScope = 'subscription'

    param location string = 'australia east'

    var RgName = 'aks-rg-test'
    var AKSName = 'aks-development'
    var AKSvNetName = 'aks-vnet'
    var AKSsubnetName = 'aks-subnet'
    var AKSsubnetPrefix = '10.0.3.0/25'

    //Resource group
    resource rg 'Microsoft.Resources/resourceGroups@2020-06-01' = {
      name: RgName
      location: location
    }

    module vnet './vnet.bicep' = {
      name: AKSvNetName
      scope: resourceGroup(rg.name)
      params: {
        location: location
        vNetName: AKSvNetName
        address_space: '10.0.3.0/24'
        SubnetName: AKSsubnetName
        subnetPrefix: AKSsubnetPrefix
      }
      dependsOn: [
        rg
      ]
    }

    module aks './aks.bicep' = {
      name: AKSName
      scope: resourceGroup(rg.name)
      params: {
        location: location
        subnet_id: vnet.outputs.subnetid
        group_id: 'bad1b814-cb6e-4027-afd2-ee0d27aef0e1'
        AKSName: 'aks-dev'
        dns_prefix: 'dev'
        kubernetes_version: '1.18.14'
        node_count: 1
        auto_scaling_min_count: 1
        auto_scaling_max_count: 3
        max_pods: 50
        os_disk_size_gb: 64
        node_size: 'Standard_D2_v3'
      }
      dependsOn: [
        vnet
      ]
    }

    output vnetid string = vnet.outputs.vnet_id
    output aksid string = aks.outputs.aks_id
    output aks_iden object = aks.outputs.aks_identity
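The vnet.bicep module that main.bicep calls is not shown in this post. A minimal sketch of what it could look like follows, assuming only the parameter and output names that main.bicep uses (location, vNetName, address_space, SubnetName, subnetPrefix, vnet_id, subnetid). The API version and the single-subnet layout are my assumptions, and the route table association that outboundType 'userDefinedRouting' relies on is left out to keep it short.

```bicep
// vnet.bicep: hypothetical sketch, not the module from my repository.
param location string = resourceGroup().location
param vNetName string
param address_space string
param SubnetName string
param subnetPrefix string

resource vnet 'Microsoft.Network/virtualNetworks@2020-06-01' = {
  name: vNetName
  location: location
  properties: {
    addressSpace: {
      addressPrefixes: [
        address_space
      ]
    }
    subnets: [
      {
        // Single subnet that hosts the AKS node pool.
        name: SubnetName
        properties: {
          addressPrefix: subnetPrefix
        }
      }
    ]
  }
}

// Names match what main.bicep reads through vnet.outputs.
output vnet_id string = vnet.id
output subnetid string = vnet.properties.subnets[0].id
```

Defining the subnet inline keeps the subnet ID available as a module output, which is what the aks module consumes through its subnet_id parameter.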

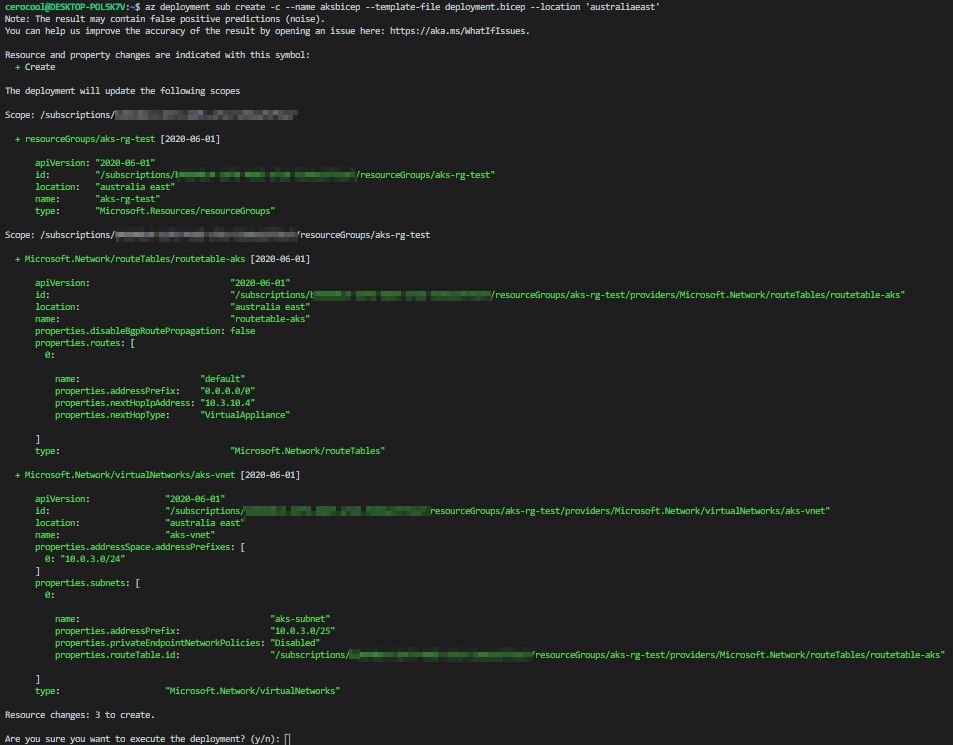

For the deployment I used the Azure CLI, and deploying was just a matter of running the command below. The -c flag, short for --confirm-with-what-if, gives me a sense of a dry run similar to terraform plan. We can also get a JSON representation of a Terraform plan, but I guess it will be a bit harder to inspect.

az deployment sub create -c --name aksbicep --template-file main.bicep --location 'australiaeast'

You can also get a JSON representation of the changes by using the command below, and you can potentially pipe the results so you can inspect those changes programmatically.

az deployment sub what-if --no-pretty-print --name aksbicep --template-file main.bicep --location 'australiaeast'

Important: I ran this exercise using my account context, which currently has a Contributor role assignment. In enterprise scenarios, you will have more comprehensive RBAC controls and will potentially use CI/CD tools that run under service principals or managed identities.

Summary

I really found Bicep so much better to deal with than ARM templates. If you are in an environment that uses ARM templates for all its deployments, Bicep will be a really good option to explore. Best of all, you can use the latest released Azure features straight away, so you will not have to wait for a release to start enjoying them. Bicep still needs to mature a bit more to offer more flexibility, but you can start getting the benefits already. Modules are something I hope gets enhanced, as this will definitely increase code reusability and reduce authoring time by removing copy/paste between different solution deployments.

On the other hand, if you are already using Terraform, you are already enjoying a more mature product whose language is cloud agnostic. I assume the Bicep team will make good efforts to bring those awesome Terraform capabilities to Bicep. Project Bicep will still be native to Azure, though, so it will not be a language you can use on other clouds.

If you are keen I found some motivation on the below great videos.👇

I hope it was informative, hasta la vista and keep working out those 🦾!!!

Code Download

The code I created for this demo is available in my blog repository on GitHub.

🚴‍♂️ If you enjoyed this blog, you can empower me with some caffeine to continue working on new content 🚴‍♂️.
